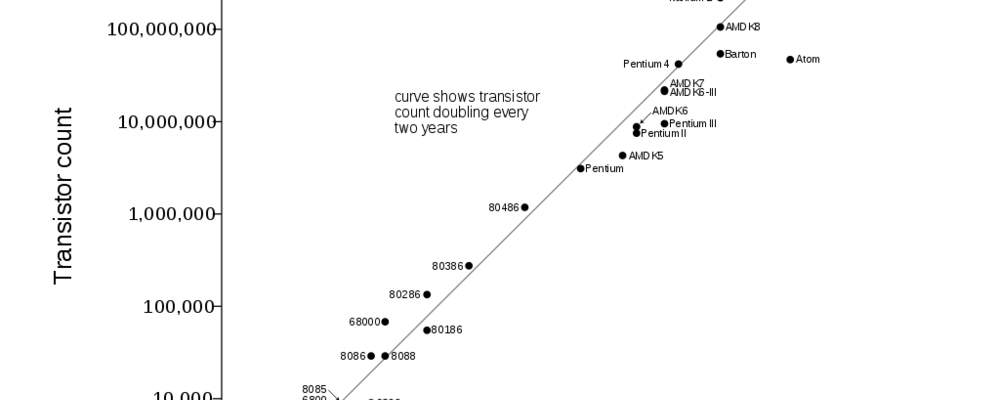

The DH Seminar: Andrew Lison, "Convolute (N)eural: Artificial Intelligence at the End of Moore's Law"

Research

Andrew Lison, Assistant Professor of Media Study at the University at Buffalo, SUNY, will give a seminar titled "Convolute (N)eural: Artificial Intelligence at the End of Moore's Law."

Seminar, Webinar